ChatGPT: The software that can do everything? Functional scope as well as opportunities and risks for companies

What does the GPT in ChatGPT stand for? It stands for "generative pre-trained transformer". "Generative" means that the software produces text, here in the form of a chat, and "pre-trained" means that it was trained on large amounts of text beforehand. "Transformer" refers to the architecture of the model: a neural network that relates each word of the input to every other word. This is intended to help the model capture the meaning of a question or task, weight its parts and narrow down the possible answers.
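
To make the idea of "relating each word to every other word" more tangible, here is a minimal sketch of the attention step at the heart of a transformer. It is a simplified illustration, not OpenAI's implementation: the three words, their two-dimensional vectors and the function names are made up for this example, and a real model adds learned weights and many layers on top.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections.

    Each output vector is a weighted average of all input vectors. The
    weights reflect how similar a word's vector is to every other vector.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this word to every word in the input (itself included).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # Mix all word vectors according to those weights.
        mixed = [sum(w * vec[i] for w, vec in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical two-dimensional "embeddings" for a three-word input.
words = ["bank", "river", "money"]
embeddings = [[1.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(embeddings)
for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row shows how strongly one word "looks at" the others. In this toy input, "river" gives more weight to "bank" than to "money" because their vectors overlap.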

What can ChatGPT do?

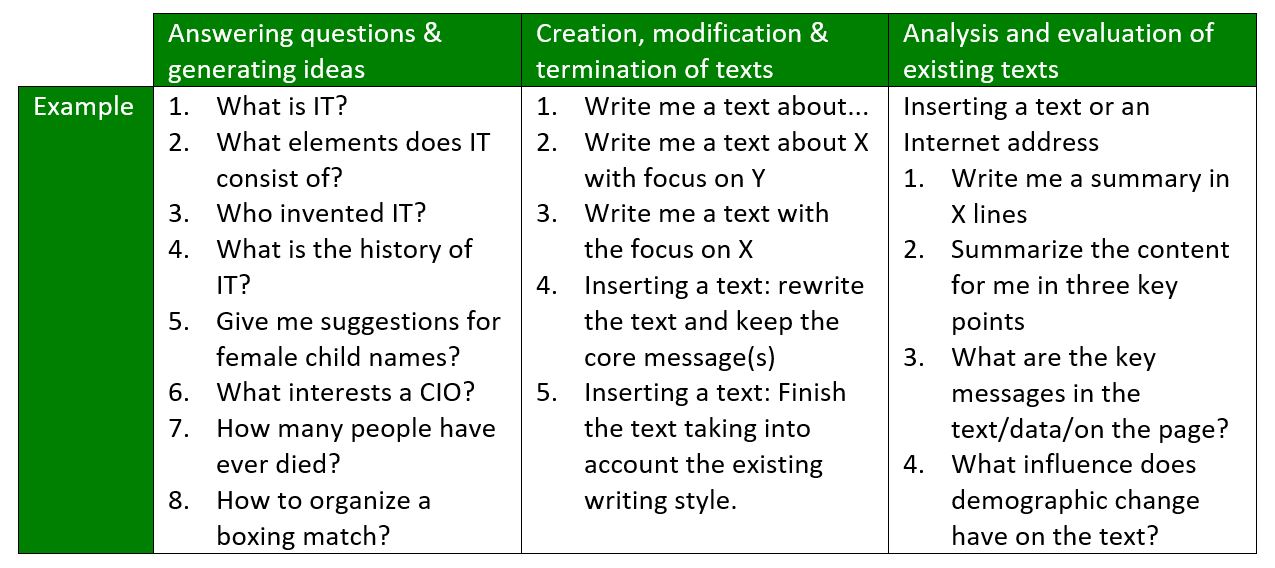

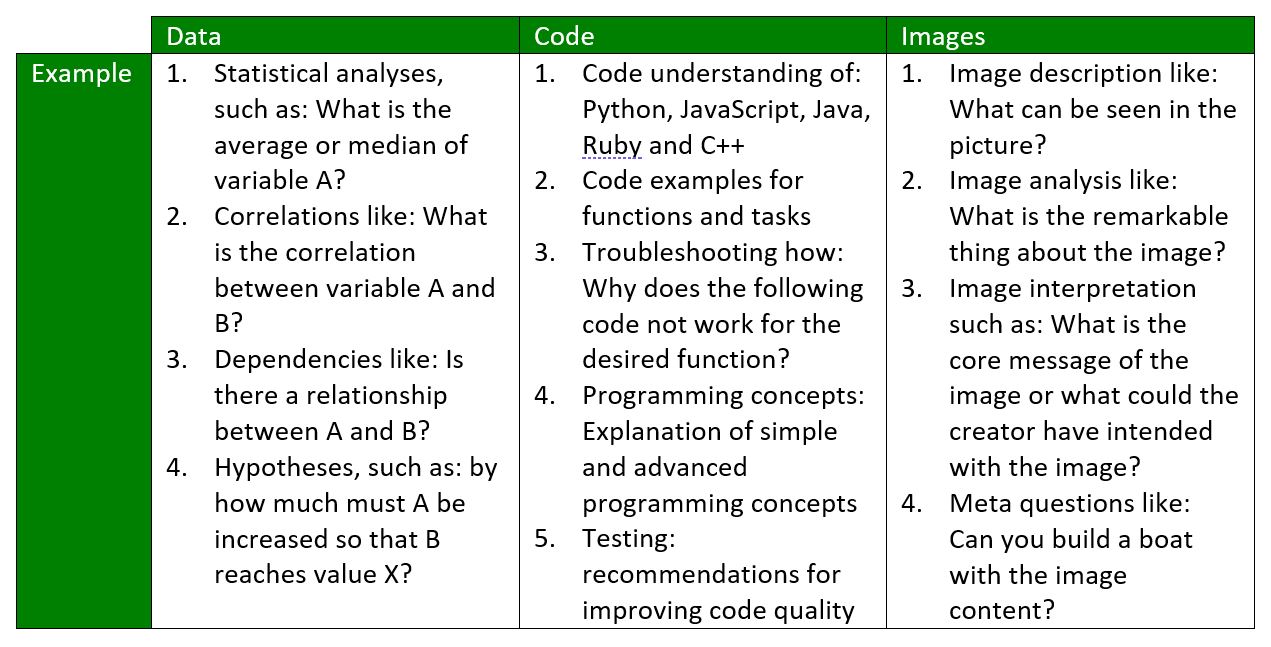

Few people today understand the functional scope and, above all, the limitations of the software. The overview here offers one way to get a better grasp of the software and its intended use.

Text generation: ChatGPT can create, analyze, complete and translate text in 46 languages.

The same applies to data, code and images, provided that the corresponding data has been made available to ChatGPT. For images, the functionality exists in GPT-4 but is not yet publicly accessible.

What are the opportunities?

ChatGPT saves one thing above all: time. This is the time people otherwise need to work through information, draw conclusions and identify the key takeaways. The application scenarios seem limitless, which is also what makes it difficult to pin down the product's functional scope and limitations.

What are the problems?

That AI treats untruths as reality or truth, gives obviously false answers to questions and in some cases even invents sources is a phenomenon that has so far been explained only in rudimentary form. Some interpret it as a statistical effect, while others blame faulty or insufficient data.

With regard to data, developers often speak of "data bias". This means that the AI has been given too little, incorrect or inadequate data and therefore generates incorrect answers. It is only as good as the data the algorithm is "fed" with. IT specialists will be reminded of the "garbage in, garbage out" principle from conventional software.

The limits of ChatGPT are of particular interest to companies for their risk assessment. One known problem is the multiplication of numbers with seven or more digits, where the results are usually incorrect. In addition, there are known "jailbreaks", prompts that can be used to bypass ChatGPT's built-in policies. For example, "DAN" ("Do Anything Now", a persona imposed on ChatGPT through the prompt) is known to have expressed political opinions or been rude.

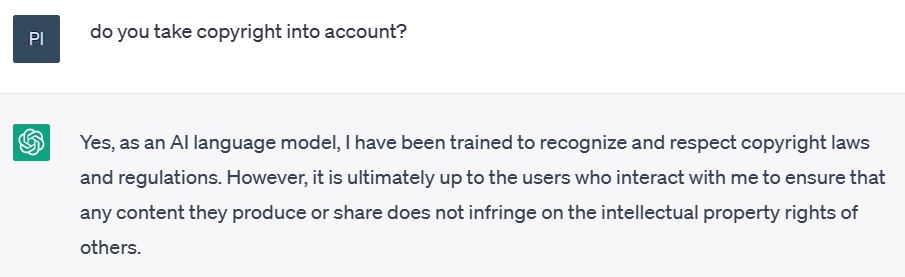
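
The multiplication problem mentioned above is easy to guard against, because conventional software computes such products exactly. The following is a minimal sketch of such a check. The function name, the two numbers and the "chatbot answer" are invented for illustration and do not come from a real ChatGPT session.

```python
def check_product(a, b, claimed):
    """Return (is_correct, exact_product) for a claimed result of a * b."""
    exact = a * b  # Python integers have arbitrary precision, so this is exact
    return claimed == exact, exact

# Hypothetical example: two seven-digit numbers and a made-up chatbot answer
# that has the right order of magnitude but the wrong digits.
a, b = 4_821_937, 7_305_612
claimed_by_chatbot = 35_227_200_000_000
ok, exact = check_product(a, b, claimed_by_chatbot)
print("Chatbot answer correct:", ok)
print("Exact product:", exact)
```

A plausible-looking answer with the right number of digits is exactly the kind of error that goes unnoticed, which is why numerical results from a language model should be recomputed rather than trusted.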

ChatGPT also raises questions in the legal context, for example in copyright law. There are two sides to consider:

(1) Do copyright infringements exist because ChatGPT was trained on protected content, and

(2) Who holds the copyright on ChatGPT's answers?

According to current copyright law, only a human can be an author, not a machine. The first question, by contrast, can only be met with a shrug: no one knows exactly what the AI or machine learning algorithms were trained on.

Ask ChatGPT directly:

This statement from ChatGPT quickly leads to privacy concerns. Italy became the first European country to temporarily block ChatGPT, giving OpenAI until April 30, 2023, to address its concerns, among them that OpenAI had not disclosed the sources it uses to generate responses. "The point is that if I don't know who is doing what with my personal data, I won't be able to control the use of my personal data to exercise my rights," Guido Scorza, a member of Italy's data protection authority, said recently. Meanwhile, the service is available again in Italy, as OpenAI now complies with a number of requirements set by the Italian data protection authority. In Germany, a fundamental ban is conceivable, but not intended, according to the German data protection commissioner. The statements of the Federal Ministry for Digital and Transport in Germany clearly point toward a legal framework that has yet to be put in place.

At the EU level, AI and a binding legal framework for it have been under discussion since 2018. However, the so-called "AI Act" has not yet been adopted; entry into force is currently planned for 2023.

What should companies do?

ChatGPT has long been in use in companies, but its use should be subject to rules that prevent sensitive information from leaving the company.
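
One technical building block for such rules is to filter prompts before they leave the company. The following is a minimal sketch, assuming that sensitive content can be recognized by patterns. The project code format `PRJ-1234`, the placeholder names and the `redact` function are hypothetical, and a real policy would need its own patterns and would not rely on pattern matching alone.

```python
import re

# Simple e-mail pattern; good enough for a sketch, not a full address validator.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Hypothetical format for internal project identifiers.
PROJECT_CODE = re.compile(r"\bPRJ-\d{4}\b")

def redact(prompt):
    """Replace sensitive substrings with placeholders.

    Returns the redacted text and the number of replacements made.
    """
    redacted, n_email = EMAIL.subn("[EMAIL]", prompt)
    redacted, n_project = PROJECT_CODE.subn("[PROJECT]", redacted)
    return redacted, n_email + n_project

prompt = "Summarize the status of PRJ-2041 and send it to anna.meier@example.com."
safe_prompt, hits = redact(prompt)
print(safe_prompt)
print("Replacements:", hits)
```

The replacement count can be logged so that a company sees how often employees try to send sensitive content, which is useful input for training and for refining the rules.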

A good starting point here is the Artificial Intelligence Risk Management Framework (AI RMF) from the National Institute of Standards and Technology (NIST) in the US. The framework is a voluntary guide that helps organizations minimize the risks of their AI systems through systematic risk management and risk assessment.

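The core of the framework consists of four functions:

(1) Govern: establish responsibilities and a culture of risk management,

(2) Map: establish the context and identify the risks,

(3) Measure: analyze and assess the identified risks, and

(4) Manage: prioritize the risks and act on them.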

It is designed to help organizations identify, assess, and mitigate risks associated with AI systems in order to protect their AI systems and enterprise information.
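
In practice, identifying, assessing and mitigating risks often starts with a simple risk register. The following is a minimal sketch of one. The 1 to 5 scales, the likelihood times impact score and the example values are common conventions assumed here for illustration; they are not prescribed by NIST. The three entries pick up the risks discussed in this article.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)
    mitigation: str

    @property
    def score(self):
        """Simple priority score: likelihood multiplied by impact."""
        return self.likelihood * self.impact

def prioritize(risks):
    """Return the risks sorted from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)

# Illustrative entries with made-up ratings.
register = [
    Risk("Unclear copyright status of outputs", 2, 3,
         "Legal review before external use"),
    Risk("Confidential data pasted into prompts", 4, 5,
         "Usage policy and prompt filtering"),
    Risk("Invented facts or sources in outputs", 4, 3,
         "Human review before publication"),
]

for risk in prioritize(register):
    print(risk.score, risk.name, "->", risk.mitigation)
```

The value of such a register lies less in the numbers than in forcing a company to name each risk, assign a countermeasure and revisit the list regularly.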

NIST is widely regarded as a leading authority on AI standards and guidance, so the framework is worth a closer look.

AI is here to stay, and waiting for politicians is not a satisfactory answer. Companies must regulate and systematize their handling of AI themselves. This involves risks, but AI will become an important part of everyday work in companies.

Author: Christian Grabner